So, here’s a fun task I randomly took up yesterday while watching the Carry On movie!

I’ve been deploying some (relatively) small LLMs lately and running A/B tests in production. So far, the smallest model I’ve used in most production deployments has been roughly 7B parameters. This time, I wanted to go below 7B parameters and finetune a model to learn a specific task without losing much of its original knowledge. More specifically, I wanted to teach Llama what a KMM response is.

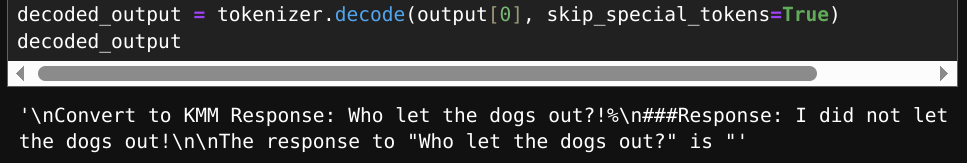

The screenshot above shows what Llama generates, before any finetuning, when I ask it about a KMM response.

Obviously, the model doesn’t know what a KMM response really means yet.

But what’s a KMM response?

It’s something I made up! :) (Just from my initials, KM.) My goal was to take something the model almost certainly knows nothing about and teach it.

Here’s what my definition of a KMM task looks like:

Given a text, convert it to a KMM response.

For example:

Text: Who let the dogs out?!

KMM Text: Who%20let%20the%20dogs%20out%3F%21

It’s a URL-encoding-like task where spaces are replaced with %20 and other special characters are replaced with their own percent-encoded values (here, ? becomes %3F and ! becomes %21). You can read more about this on the query string Wikipedia page.

Simply put, it’s URL encoding done the ML engineer way! (Because why not! ¯\_(ツ)_/¯)
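The post doesn’t pin down the rules beyond this example. As a minimal sketch, assuming a KMM response is just standard percent-encoding, Python’s `urllib.parse.quote` reproduces the example above; the `to_kmm` name is hypothetical.

```python
from urllib.parse import quote


def to_kmm(text: str) -> str:
    """Convert text to a KMM response, assuming KMM == standard percent-encoding.

    safe="" leaves only letters, digits and "_.-~" unencoded, so spaces become
    %20, "?" becomes %3F, "!" becomes %21, and even "/" becomes %2F.
    """
    return quote(text, safe="")


if __name__ == "__main__":
    print(to_kmm("Who let the dogs out?!"))  # Who%20let%20the%20dogs%20out%3F%21
```

By default `quote` keeps `/` unencoded (`safe="/"`); passing `safe=""` is a design choice in this sketch, since the post doesn’t say how slashes should be handled.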

Setup

The model I chose was the Llama3 1B base model. I created a dataset of ~500 samples and started finetuning the model on it.
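The dataset itself isn’t shown. As a hedged sketch, assuming each sample pairs an input with its KMM text using the same “Text:” / “KMM Text:” layout as the example above, prompt/completion pairs could be generated and serialized as JSON Lines like this. `PROMPT_TEMPLATE`, `make_sample`, `to_jsonl`, and the seed texts are all hypothetical.

```python
import json
from urllib.parse import quote

# Hypothetical prompt layout, mirroring the "Text: ... / KMM Text: ..." example.
PROMPT_TEMPLATE = "Text: {text}\nKMM Text: "


def make_sample(text: str) -> dict:
    """Build one prompt/completion pair; the target assumes KMM == percent-encoding."""
    return {
        "prompt": PROMPT_TEMPLATE.format(text=text),
        "completion": quote(text, safe=""),
    }


def to_jsonl(texts) -> str:
    """Serialize samples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(make_sample(t)) + "\n" for t in texts)


if __name__ == "__main__":
    # Hypothetical seed texts; a real dataset would need ~500 far more varied ones.
    seed_texts = ["Who let the dogs out?!", "Is 50% off a good deal?", "rock & roll"]
    print(to_jsonl(seed_texts), end="")
```

With a base model there is no chat template marking where an answer ends, so in practice you would likely also append the tokenizer’s EOS token to each completion so the model learns to stop right after the encoded string.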

A big challenge with models this small is that they need a lot of diverse data to learn. I specifically chose the base model over the other variants to make this more challenging and fun!

I’ve finetuned many large models before, and I have to say, finetuning smaller LLMs is always more fun. Unlike with larger models, there are always more knobs to tweak to get things right when working with small LLMs.
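The post doesn’t list which knobs or values were tuned. Purely as an illustration, here is a minimal grid-search sketch over three common finetuning hyperparameters; the names and values in `SEARCH_SPACE` and the `grid` helper are hypothetical, and each resulting config would be passed to whatever training loop you use.

```python
from itertools import product

# Hypothetical search space: the post doesn't say which values were actually used.
SEARCH_SPACE = {
    "learning_rate": [1e-5, 5e-5, 1e-4],
    "num_epochs": [3, 5],
    "batch_size": [8, 16],
}


def grid(space: dict) -> list:
    """Expand a dict of candidate lists into every combination (plain grid search)."""
    keys = list(space)
    return [dict(zip(keys, combo)) for combo in product(*(space[k] for k in keys))]


if __name__ == "__main__":
    configs = grid(SEARCH_SPACE)
    print(len(configs))  # 3 * 2 * 2 = 12 runs
    print(configs[0])  # {'learning_rate': 1e-05, 'num_epochs': 3, 'batch_size': 8}
```

The number of runs multiplies with every knob you add, which stays manageable for a 1B model but grows quickly.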

Result

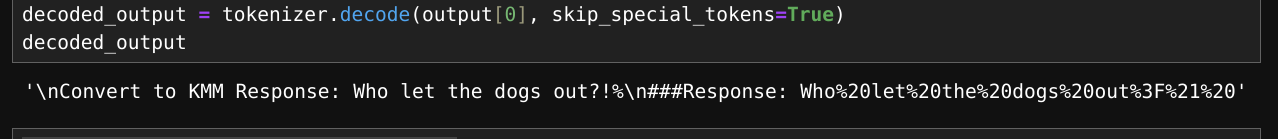

After experimenting with the tokenizer and tweaking the hyperparameters, the screenshot above shows the results I got on the sample prompts.

The generated text makes it clear that the model learned the task from the dataset: it converts the input text correctly, and it does so with less than ~2% difference in MP scores.
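One hedged way to put a number on “converts the input text correctly” is an exact-match rate against reference encodings, again assuming KMM text equals `quote(text, safe="")`. `exact_match_rate` is a hypothetical helper, and it does not reproduce the MP scores mentioned above.

```python
from urllib.parse import quote


def exact_match_rate(texts, predictions) -> float:
    """Fraction of model outputs that match the reference KMM text exactly.

    References assume KMM == standard percent-encoding (quote with safe="").
    Surrounding whitespace in predictions is ignored, since generations often
    end with a newline.
    """
    if len(texts) != len(predictions):
        raise ValueError("texts and predictions must have the same length")
    if not texts:
        return 0.0
    hits = sum(
        pred.strip() == quote(text, safe="") for text, pred in zip(texts, predictions)
    )
    return hits / len(texts)


if __name__ == "__main__":
    texts = ["Who let the dogs out?!", "rock & roll"]
    preds = ["Who%20let%20the%20dogs%20out%3F%21\n", "rock%20&%20roll"]
    print(exact_match_rate(texts, preds))  # 0.5 (the "&" was not encoded)
```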

Model archiving

I’ve pushed the finetuned model to my Hugging Face repository for archiving, since I mostly did this for fun while watching a movie and I already have too many model files on my HDDs.

With careful finetuning, even small LLMs can pick up new tasks quite well. Until next time!